If you are just looking for code for a convolutional autoencoder in Python, look at this git repository. It needs quite a few Python dependencies; the only non-standard ones are Theano, nolearn, and Lasagne (make sure they are up to date). There is also a section at the end of this post that explains the code.

Intro

I have recently been working on a project to teach a neural network to count the number of blobs in an image, such as the one below:

I will save the why and the details of this task for a later post. One of the methods I have been looking at is using autoencoders. This means we build a network that takes an image as input and tries to reconstruct the same image as output. Of course, the identity function would do this exactly, but we would not have learned anything interesting about the image that way. There are several methods to prevent this, such as adding some sort of randomness to the image; see here or here for example. We will use an easier method, which is to make one of the layers of the network quite narrow. This means that the network must compress all the data from the image into a small vector, from which it must reconstruct the image. We hope that this forces the autoencoder to learn useful features of the image.
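To make the bottleneck idea concrete, here is a minimal NumPy sketch. It is not the network from this post: it is a purely linear autoencoder with a 4-unit narrow layer, trained by plain gradient descent on synthetic 16-dimensional data that actually lies in a 4-dimensional subspace. The data, layer sizes, learning rate and the absence of nonlinearities are all illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data: 200 samples of dimension 16 that secretly lie in a 4-dimensional
# subspace, so a 4-unit bottleneck can, in principle, reconstruct them exactly.
latent = rng.normal(size=(200, 4))
mixing = rng.normal(size=(4, 16))
X = latent @ mixing

n_in, n_code = 16, 4  # the narrow "encoding" layer has only n_code units
W_enc = rng.normal(scale=0.1, size=(n_in, n_code))
W_dec = rng.normal(scale=0.1, size=(n_code, n_in))


def loss(X):
    """Mean squared reconstruction error of the linear autoencoder."""
    return np.mean((X @ W_enc @ W_dec - X) ** 2)


initial_loss = loss(X)
lr = 0.05
for step in range(3000):
    code = X @ W_enc                     # compress each sample to n_code numbers
    err = code @ W_dec - X               # reconstruction minus target
    grad_out = 2 * err / err.size        # gradient of the mean squared error
    grad_W_dec = code.T @ grad_out
    grad_W_enc = X.T @ (grad_out @ W_dec.T)
    W_dec -= lr * grad_W_dec
    W_enc -= lr * grad_W_enc

final_loss = loss(X)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

Because the code layer is narrower than the input, the network cannot simply copy its input; it has to find a small set of directions that explain the data. With a linear encoder and decoder this amounts to recovering the same subspace as PCA; the nonlinear, convolutional version in this post is the same idea with more expressive layers.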

The most powerful tool we have for helping networks learn about images is the convolutional layer. This post has a good explanation of how convolutional layers work. It seems natural to try to use them for our autoencoder, and indeed there is some work in the literature about this; see for example this paper of Masci et al. Unfortunately, there is not much online to help someone get started building one of these structures, so, having built one myself, I have provided some code. Our network has the following structure:

There are a lot of things we can change about this skeleton model. After a lot of trial and error, I arrived at a net with one convolutional/pooling layer and one deconvolution/unpooling layer, both with filter size 7. The narrow encoding layer has 64 units. The model trains on an Nvidia 900 series GPU in roughly 20 minutes.
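Because the layer sizes have to line up, it helps to trace them through the net. The sketch below assumes 28 x 28 MNIST inputs, the 7 x 7 filters mentioned above, 2 x 2 pooling (an assumption; the pool size is not stated here), and dense layers that reshape the 64-unit code back to the pooled map size. It treats the deconvolution as the valid convolution read in reverse, which grows the side length by k - 1, the same change a 'full' border produces.

```python
def conv_out(n, k, mode):
    """Side length after convolving an n x n map with a k x k filter."""
    if mode == "valid":   # filter must fit entirely inside the input
        return n - k + 1
    if mode == "full":    # filter may hang over each border by k - 1 pixels
        return n + k - 1
    raise ValueError(f"unknown border mode: {mode}")


def trace_sizes(n=28, k=7, pool=2, code_units=64):
    """Return (layer, size) pairs; sizes are side lengths except for the code layer."""
    sizes = [("input", n)]
    n = conv_out(n, k, "valid")
    sizes.append(("conv (valid)", n))
    n //= pool
    sizes.append(("max-pool", n))
    sizes.append(("encoding units", code_units))
    n *= pool                      # assumes the dense layers restore the pooled size
    sizes.append(("unpool", n))
    n = conv_out(n, k, "full")     # valid connections read upwards: grows by k - 1
    sizes.append(("deconv (valid, read upwards)", n))
    return sizes


for layer, size in trace_sizes():
    print(f"{layer:30s} {size}")
```

With these assumptions the sizes go 28 → 22 → 11 → (64 units) → 22 → 28, so the output matches the input. With an odd pooled size, or a different filter size, the final layer would not come back to 28, which is why the sizes need care.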

Results

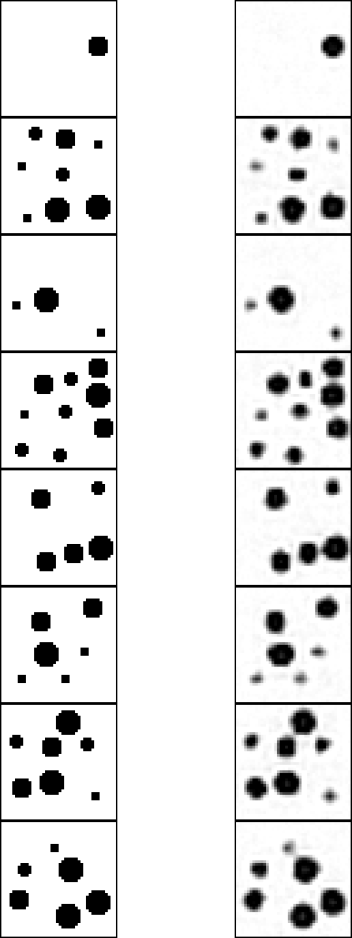

Here are some of the inputs/outputs of the autoencoder. It does a fairly decent job of getting the position and size of the circles, though they are not fantastically circular.

We can run pretty much the same architecture on lots of datasets. Here is MNIST with 64 units on the narrow layer:

Code discussion

The code can be found at this git repository; it works on the popular MNIST handwritten digit dataset.

The convolution stage of the network is straightforward to build with neural network libraries such as Caffe, Torch7, pylearn, etc. I have done all of my work on neural networks in Theano, a Python library that can work out the gradient steps involved in training and compile to CUDA, which can run on a GPU for large speed gains over CPUs. Recently I have been using the Lasagne library, built on Theano, to help write layers for neural nets, and nolearn, which has some nice classes to help with the training code, which is generally quite long and messy in Theano. My code is written with these libraries; however, it should be reasonably straightforward to convert it into code that relies only on Theano.

Unpooling

There are some fancy things one can do when undoing the pooling operation; however, in our net we just do a simple upsampling. That is to say, our unpooling operation looks like this:

Unpooling certainly seems to help the deconvolutional step.
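The simple upsampling can be sketched in NumPy with `repeat`: every pooled value is copied into a 2 x 2 block. This is an illustration that assumes 2 x 2 non-overlapping max-pooling on a single 2-D map. For 4-D tensors laid out as (batch, channels, rows, columns), the same idea would repeat along the two spatial axes instead.

```python
import numpy as np


def max_pool(x, ds=2):
    """Non-overlapping ds x ds max-pooling of a 2-D array (sides divisible by ds)."""
    h, w = x.shape
    return x.reshape(h // ds, ds, w // ds, ds).max(axis=(1, 3))


def unpool(x, ds=2):
    """Simple upsampling: copy every value into a ds x ds block."""
    return x.repeat(ds, axis=0).repeat(ds, axis=1)


x = np.arange(16).reshape(4, 4)
pooled = max_pool(x)      # 2 x 2 array of block maxima
restored = unpool(pooled) # back to 4 x 4, each maximum spread over its block
print(pooled)
print(restored)
```

Unlike the fancier alternatives, this does not remember where each maximum came from; it simply spreads each value over its whole block and leaves the deconvolution to sharpen the result.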

Deconvolution layer = Convolutional layer

Let’s consider 1-dimensional convolutional layers. The following picture represents one filter of length 3 between two layers:

The connections of a single colour all share a single weight. Note that this picture is completely symmetric: we can consider it as a convolution upwards or downwards. There are two important points to note, however. Firstly, for deconvolution we need to use a symmetric activation function (definitely NOT rectified linear units). Secondly, the picture is not symmetric at the edges of the diagram. There are two main border methods used in convolutional layers: ‘valid’, which means we only take inputs from places where the filter fits completely inside the border (which causes the output dimensions to go down), and ‘full’, where we allow the filter to cross the edges of the picture (which causes the output dimensions to go up). I chose to use valid borders for the convolutional and deconvolutional steps, but note that this means we have to be careful about the sizes of the layers at each step.
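The symmetry can be checked numerically in 1-D. Write a valid convolution (strictly, the cross-correlation that neural-net libraries compute) as a matrix whose rows all reuse the same three shared weights. The downward pass is then a multiplication by that matrix, and reading the same connections upwards is a multiplication by its transpose. The sketch below, with made-up values, confirms that the transpose equals NumPy's 'full' convolution with the same filter (in cross-correlation terms, the filter is flipped). So the valid step shrinks 6 units to 4, and the upward step restores 6.

```python
import numpy as np


def valid_conv_matrix(w, n):
    """Matrix of a 1-D 'valid' cross-correlation of a length-n input with filter w.
    Row i holds the shared weights w in columns i .. i + len(w) - 1."""
    k = len(w)
    W = np.zeros((n - k + 1, n))
    for i in range(n - k + 1):
        W[i, i:i + k] = w
    return W


w = np.array([1.0, 2.0, 3.0])        # one filter of length 3
x = np.arange(6, dtype=float)        # lower layer, 6 units
W = valid_conv_matrix(w, len(x))     # 4 x 6: 'valid' shrinks 6 -> 4

down = W @ x                          # convolution "downwards"
assert np.allclose(down, np.correlate(x, w, mode="valid"))

y = np.array([1.0, -1.0, 0.5, 2.0])  # upper layer, 4 units
up = W.T @ y                          # the same shared weights read "upwards"
assert np.allclose(up, np.convolve(y, w, mode="full"))
print(down.shape, up.shape)           # prints: (4,) (6,)
```

This is why a deconvolution layer can be built from an ordinary convolutional layer: only the border mode (and hence the size bookkeeping) changes.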

We could also try setting the deconvolutional weights equal to the transpose of the original ones (tied weights), as in the classic autoencoder. This is useful because it means the encoding will be roughly normalised, and the model has fewer degrees of freedom. I did not try this, however, since the simpler approach seemed to work fine.
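For reference, here is what tied weights look like in the classic (dense) autoencoder: the decoder reuses the encoder matrix, transposed, which roughly halves the number of parameters. This is a minimal NumPy sketch with an assumed 784-input, 64-unit code and a tanh encoder; for the convolutional case, the same idea would mean reusing the encoder filters in the deconvolution. It shows only the forward pass and the parameter count, not training.

```python
import numpy as np

rng = np.random.default_rng(0)
n_in, n_code = 784, 64                            # e.g. a flattened 28 x 28 image, 64-unit code

W = rng.normal(scale=0.01, size=(n_code, n_in))   # encoder weights
b = np.zeros(n_code)                              # encoder bias
c = np.zeros(n_in)                                # decoder bias


def encode(x):
    return np.tanh(W @ x + b)


def decode_tied(h):
    # Tied weights: the decoder reuses the encoder matrix, transposed.
    return W.T @ h + c


x = rng.random(n_in)
x_hat = decode_tied(encode(x))

tied_params = W.size + b.size + c.size
untied_params = tied_params + W.size   # an untied decoder needs its own n_in x n_code matrix
print(x_hat.shape, tied_params, untied_params)  # prints: (784,) 51024 101200
```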

Great post, and thanks for posting your code on git. I had been trying to replicate a neural network used for semantic segmentation that used “unpooling”, but was having difficulty figuring out the best approach to reproduce it in Theano. “repeat” was what I was missing.

Which version of Lasagne/Theano have you tested this with? I have downloaded the newest version, and the code fails at the line “from lasagne.objectives import mse”. I can’t find mse in the Lasagne documentation. Do you know if it has been removed? Can I work around it?

mse has been replaced with squared_error in newer Lasagne versions. I just removed the “from lasagne.objectives import mse” line and it runs.

I also get an error when it tries to initialize the neural net:

    File "mnist_conv_autoencode.py", line 158, in
        ae.fit(X_train, X_out)
    File "/opt/local/Library/Frameworks/Python.framework/Versions/2.7/lib/python2.7/site-packages/nolearn/lasagne/base.py", line 442, in fit
        self.initialize()
    File "/opt/local/Library/Frameworks/Python.framework/Versions/2.7/lib/python2.7/site-packages/nolearn/lasagne/base.py", line 283, in initialize
        out = self._output_layer = self.initialize_layers()
    File "/opt/local/Library/Frameworks/Python.framework/Versions/2.7/lib/python2.7/site-packages/nolearn/lasagne/base.py", line 380, in initialize_layers
        chain_exception(TypeError(msg), e)
    File "/opt/local/Library/Frameworks/Python.framework/Versions/2.7/lib/python2.7/site-packages/nolearn/_compat.py", line 14, in chain_exception
        exec("raise exc1, None, sys.exc_info()[2]")
    File "/opt/local/Library/Frameworks/Python.framework/Versions/2.7/lib/python2.7/site-packages/nolearn/lasagne/base.py", line 375, in initialize_layers
        layer = layer_factory(**layer_kw)
    File "/opt/local/Library/Frameworks/Python.framework/Versions/2.7/lib/python2.7/site-packages/lasagne/layers/conv.py", line 391, in __init__
        super(Conv2DLayer, self).__init__(incoming, **kwargs)
    TypeError: Failed to instantiate with args {'incoming': , 'name': 'conv', 'nonlinearity': None, 'filter_size': (7, 7), 'num_filters': 32, 'border_mode': 'valid'}.

Maybe parameter names have changed?

I removed the lines containing border_mode in mnist_conv_autoencode.py, which did the trick for me.

Heya, this project has been updated recently. The NN library requirements can be found in the requirements.txt file.

Thanks for the code!

What’s the difference between ‘Conv2DLayerSlow’ and ‘Conv2DLayerFast’? Why did you use these two together in the network?
