(edit1 : this got to the top of r/machinelearning, check out the comments for some discussion)

(edit2 : code for this project can now be found at this repo, discussion has been written up here)

Recently a very impressive paper came out which produced some extremely life-like images generated from a neural network. Since I wrote a post about convolutional autoencoders about 9 months ago, I have been thinking about the problem of how one could upscale or ‘enhance’ an image, CSI-style, by using a neural network to fill in the missing pixels. I was therefore very interested while reading this paper, as one of its predecessors was attempting to do just that (albeit in a much more extreme way, generating images from essentially 4 pixels).

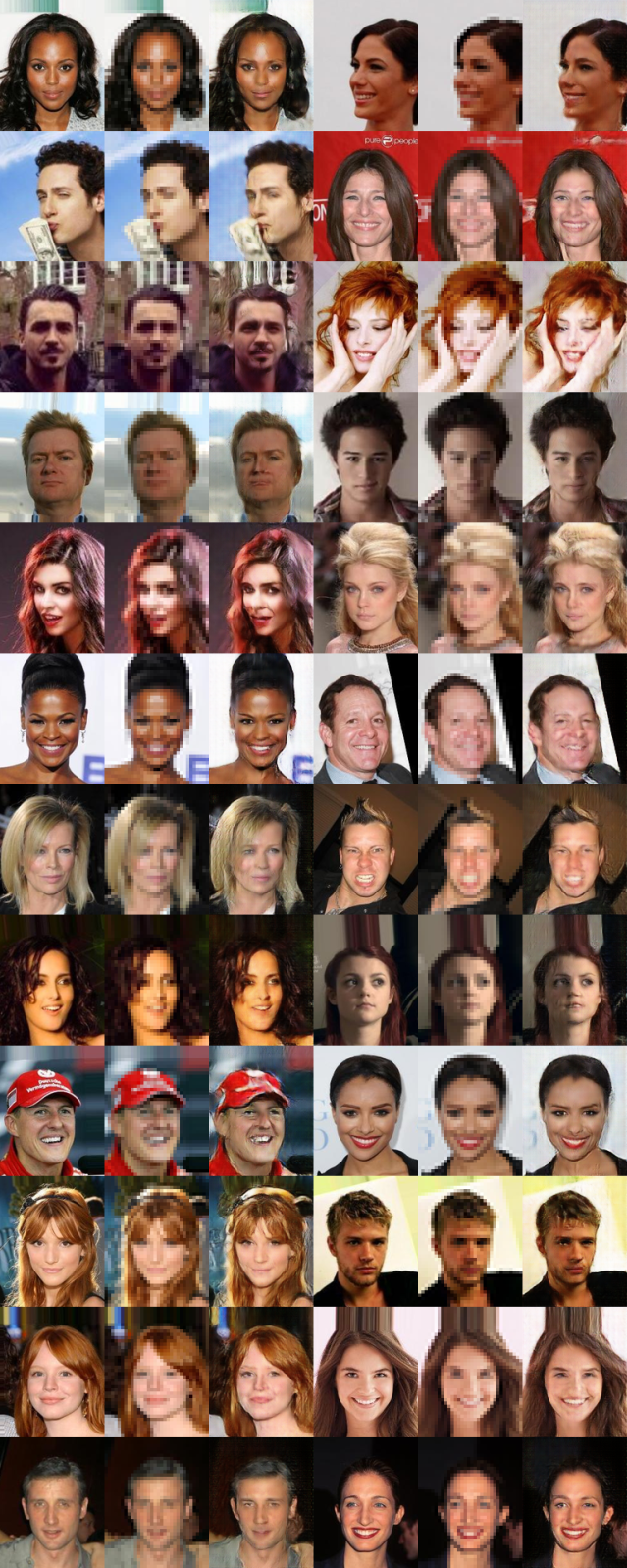

Using this as inspiration, I built a neural network with the DCGAN structure in Theano, and trained it on a large set of images of celebrities. Here is a random sample of outputs: the original images are on the left, the grainy images fed into the neural network are in the middle, and the outputs are on the right.

N.B. this image is large; you should open it and zoom in to really see the detail (or lack of detail) produced by the DCGAN. I certainly do not claim the DCGAN did phenomenally well on pixel-level detail, although occasionally I’d say it did a pretty impressive job, particularly with things like hair.

This is a work in progress, and I hope to work on it more in the future. However, DCGANs are very tiresome to train, and I want to take a break, so writing up for now seems sensible. I will write more about implementation with code in the near future.

The problem

An old meme on the internet concerns the long-running TV show CSI. A common trope of the show is to enhance grainy images (example). This has been spoofed numerous times (my favourite example). While the internet is correct to criticise the CSI treatment, as it breaks the laws of information theory, there is some hope that one could actually enhance a downsampled image a bit. Our brain knows what the real world looks like, so it can fill in gaps within reason. For example, consider the following downsampled image.

We can immediately tell that this is a man wearing a black top (Paul Rudd, incidentally). Just looking at the picture, it is easy to guess, for example, where the pupils of his eyes are, or the contour of his jawline. Our brain can fill in a lot of the blanks of this image because we know what faces look like. Similarly, we could hope to teach a neural network what a face is supposed to look like, and let it imprint that knowledge onto a grainy image, thereby ‘enhancing’ it. To distance myself as far as possible from CSI here, I am not suggesting that we could 100% reconstruct the original image from the grainy version, just that it should be possible to construct a realistic-looking image that compresses down to our grainy version, if our neural network understands enough about the type of image it is looking at. Note that plain convolutional autoencoders, as talked about in the previous post, are not good at generating realistic images: generally, edges will get blurred, as the network hedges its bets on where significant change points in the image occur.
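To make the information-theory point concrete, here is a minimal NumPy sketch (not the code from this project). It assumes the grainy image is produced by averaging non-overlapping pixel blocks, which is only one possible way to downsample; the function name `downsample` and the image sizes are made up for illustration. It builds two different high-resolution images that compress down to exactly the same grainy image, which is why the original cannot be recovered with certainty and the best we can hope for is a plausible candidate.

```python
import numpy as np

def downsample(image, factor):
    """Average-pool an (H, W, C) image over non-overlapping factor x factor blocks."""
    h, w, c = image.shape
    assert h % factor == 0 and w % factor == 0, "factor must divide height and width"
    # Split each spatial axis into (block index, position inside block), then average each block.
    blocks = image.reshape(h // factor, factor, w // factor, factor, c)
    return blocks.mean(axis=(1, 3))

rng = np.random.default_rng(0)
original = rng.random((64, 64, 3))   # stand-in for a real photo
grainy = downsample(original, 4)     # shape (16, 16, 3)

# Build a second, different high-resolution image with the same grainy version:
# add noise whose average over every 4x4 block is exactly zero.
noise = rng.normal(size=original.shape)
block_means = np.repeat(np.repeat(downsample(noise, 4), 4, axis=0), 4, axis=1)
alternative = original + 0.1 * (noise - block_means)

same_when_downsampled = np.allclose(downsample(alternative, 4), grainy)
same_at_full_resolution = np.allclose(alternative, original)
```

Here `same_when_downsampled` is true while `same_at_full_resolution` is false: many full-resolution images share one grainy version, and the network's job is to pick a realistic one.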

GANs

A generative adversarial network (GAN) is a really nice idea for generating realistic-looking images. The idea is that we train two networks at the same time: a generator and a discriminator. The discriminator’s job is to try and distinguish real images from those produced by the generator. The generator’s job is to try and generate images that will fool the discriminator. The hope is that these two networks, working against each other, will eventually teach the generator to produce an image that is hard for a human to distinguish from the real thing.
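The two competing objectives can be sketched in a few lines of NumPy. This is a common textbook formulation (binary cross-entropy, with the generator rewarded when the discriminator calls its images real), not necessarily the exact losses used in this project. The function names are made up for illustration, and the inputs are assumed to be the discriminator's output probabilities that an image is real.

```python
import numpy as np

def binary_cross_entropy(predictions, targets, eps=1e-7):
    """Mean binary cross-entropy between predicted probabilities and 0/1 targets."""
    p = np.clip(predictions, eps, 1 - eps)  # avoid log(0)
    return float(-np.mean(targets * np.log(p) + (1 - targets) * np.log(1 - p)))

def discriminator_loss(d_real, d_fake):
    """Low when real images score near 1 and generated images score near 0."""
    return (binary_cross_entropy(d_real, np.ones_like(d_real))
            + binary_cross_entropy(d_fake, np.zeros_like(d_fake)))

def generator_loss(d_fake):
    """Low when the discriminator scores generated images near 1, i.e. is fooled."""
    return binary_cross_entropy(d_fake, np.ones_like(d_fake))

# Discriminator scores for a batch where it is currently winning.
d_real = np.array([0.9, 0.8, 0.95])
d_fake = np.array([0.1, 0.2, 0.05])

d_loss = discriminator_loss(d_real, d_fake)  # small: the discriminator is doing well
g_loss = generator_loss(d_fake)              # large: the generator is not fooling it
```

In training, the two losses are minimised in alternation, each with respect to its own network's weights, so an improvement in one network raises the loss of the other.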

Most DCGANs produce images from a random vector which is generated on the fly. Our situation is slightly different: we are given a grainy image, and would like the generator to produce an image that not only fools our discriminator, but also looks like the original grainy image. We basically replace the generator with an autoencoder-like structure. The grainy image goes in one end, passes through a series of convolutional layers (to try and reason about both the small and large scale properties of the image), and is then reconstructed with the upscaling structure used in the DCGAN paper. We then downsample the generated image and compare it to the grainy version (we want to make sure that, were we to make the generated image more grainy, it would look like the input picture).
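One way to combine the two requirements on the generator is to add an adversarial term to a downsampling-consistency term. The sketch below is an illustration under assumptions, not the loss from this project: it assumes block-average downsampling, a mean squared error comparison, and a hypothetical `consistency_weight` that balances the two terms. The names `downsample` and `enhancer_loss` are invented for this example.

```python
import numpy as np

def downsample(image_batch, factor):
    """Average-pool a batch of (N, H, W, C) images over factor x factor blocks."""
    n, h, w, c = image_batch.shape
    blocks = image_batch.reshape(n, h // factor, factor, w // factor, factor, c)
    return blocks.mean(axis=(2, 4))

def enhancer_loss(generated, grainy_input, d_on_generated, factor,
                  consistency_weight=1.0, eps=1e-7):
    """Return (total, adversarial, consistency) loss terms for the generator.

    adversarial: small when the discriminator thinks the generated images are real.
    consistency: small when the generated images downsample back to the grainy inputs.
    """
    p = np.clip(d_on_generated, eps, 1 - eps)
    adversarial = float(-np.mean(np.log(p)))
    consistency = float(np.mean((downsample(generated, factor) - grainy_input) ** 2))
    return adversarial + consistency_weight * consistency, adversarial, consistency

rng = np.random.default_rng(0)
grainy = rng.random((2, 8, 8, 3))    # two 8x8 grainy inputs
d_scores = np.array([0.5, 0.5])      # discriminator is unsure about both outputs

# Nearest-neighbour upscaling is perfectly consistent with the input (but looks blocky).
blocky = np.repeat(np.repeat(grainy, 4, axis=1), 4, axis=2)
_, _, blocky_consistency = enhancer_loss(blocky, grainy, d_scores, factor=4)

# An unrelated image may look like anything, but it does not match the grainy input.
unrelated = rng.random((2, 32, 32, 3))
_, _, unrelated_consistency = enhancer_loss(unrelated, grainy, d_scores, factor=4)
```

The consistency term alone is satisfied by a blocky upscaling, so it is the adversarial term that pushes the output towards something that looks like a real photo.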

More outputs, and some remarks

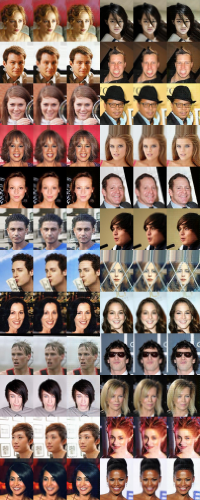

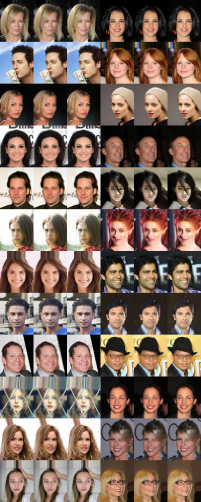

I am showing the results of running the network on cropped images from the CelebA dataset (about 200,000 images). Here are some more example outputs of the network over the last few hundred batches of training.

Remarks

- DCGANs are a real pain to train. I worked in an IPython notebook, and manually stopped and started the training to adjust as I went. They are also pretty unstable. Even after a day of training, the network would occasionally do something crazy, like set everyone’s mouth to be open, which looked extremely bizarre.

- It is not clear to me how well the discriminator or generator really dealt with high-level features during my experiments, for example the evenness of the eyes or, for ImageNet, the wobbliness of lines that should clearly be straight.

- The dataset I used was deliberately extremely uniform, to make things easier for the generator. I tried working with a more diverse training set, taking subsamples from ImageNet. My conclusion was that the network probably needed to be able to store a lot more information to deal with the sheer range of different textures and shapes it would need to reproduce. Here is an example of the kind of outputs I got: occasionally decent, but with obvious filter artefacts on most of them. I suspect some new ideas or a massive architecture boost may be required to get this to work anything like as well as the faces.


Thank you, fantastic blog. Good luck!


Have you tried this on images that need restoration? Images with pieces missing, dust spots, ink stains, etc? You can find samples to work on here: https://www.reddit.com/r/estoration/new/


Clicking on “written up here” forces me to log in to WordPress, then when I do, it simply redirects me to my own WordPress blog. Where does one see this discussion?


hmm, weird, here’s the link, not sure if I can be any more direct than this… https://wordpress.com/post/swarbrickjones.wordpress.com/504


That link just redirects me to my own WordPress blog. Maybe someone else will know why this is happening to me?


Are you sure that link is a public one? Does it work for you when not logged in?


https://swarbrickjones.wordpress.com/2016/01/24/generative-adversarial-autoencoders-in-theano/ ??


I think I’ve fixed it. Thanks for the heads up!


Great blog, may I know if you have any examples with an actual code walkthrough?


See the sister post to this: https://swarbrickjones.wordpress.com/2016/01/24/generative-adversarial-autoencoders-in-theano/
