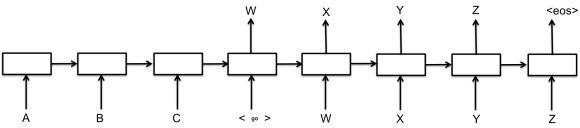

I have been doing a bunch of side projects recently and not writing them up. This one may be of some interest to other people, since TensorFlow is so in vogue right now. I have been interested in trying sequence-to-sequence learning for some time, so I came up with a toy problem to solve. I actually made some effort to make the notebook legible, and it would probably be easiest to just read that for the problem and code description: see the notebook here. It includes the picture above (just to make this post look more interesting).

Please note this is a work in progress; I will probably write up the problem and solution themselves at a later date (but at my current rate of write-ups, perhaps not…)

Hello, Mike!

I think I’ve just found a bug in your ipynb notebook:

```python
cells = [rnn_cell.DropoutWrapper(
    rnn_cell.BasicLSTMCell(embedding_dim), output_keep_prob=0.5
) for _ in range(3)]
```

In the code above you pass output_keep_prob=0.5 once and for all, so dropout stays active even when you use your model to make predictions on new data!

You could replace output_keep_prob=0.5 with a placeholder, so you can pass 0.5 while training and 1.0 otherwise (a keep probability of 1.0 switches dropout off)!


I mean,

```python
dropout_ph = tf.placeholder(tf.float32, shape=…)

DropoutWrapper(basic_cell, output_keep_prob=dropout_ph)
```

and then:

```python
[train] = session.run(…, {inputs: X, dropout_ph: [0.5, 0.5, 0.5]})

[predict] = session.run(…, {inputs: X, dropout_ph: [1.0, 1.0, 1.0]})
```
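Aleksei's point can be checked without TensorFlow at all. Below is a minimal pure-Python sketch of inverted dropout, the scaling scheme `tf.nn.dropout` uses (surviving units are multiplied by 1/keep_prob). The `dropout` helper and the sample activations are hypothetical illustrations, not code from the notebook; the `keep_prob` argument plays the role of the placeholder, set to 0.5 during training and 1.0 at prediction time.

```python
import random


def dropout(values, keep_prob, rng=random):
    """Hypothetical helper: inverted dropout on a list of activations.

    Each unit is kept with probability keep_prob and scaled by 1/keep_prob,
    so the expected value of every output equals its input.
    """
    if not 0.0 < keep_prob <= 1.0:
        # 0.0 would zero every unit, so this sketch rejects it outright.
        raise ValueError("keep_prob must be in (0, 1]")
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]


activations = [1.0, 2.0, 3.0, 4.0]

# Training: each unit is either dropped (0.0) or doubled (scaled by 1/0.5).
train_out = dropout(activations, keep_prob=0.5, rng=random.Random(0))

# Prediction: keep_prob=1.0 keeps every unit and leaves it unscaled.
predict_out = dropout(activations, keep_prob=1.0)
```

With `keep_prob=1.0` the function returns its input unchanged, which is what you want at prediction time, whereas a hard-coded 0.5 would keep randomly zeroing units on new data. In TensorFlow 1.x, `tf.placeholder_with_default(1.0, shape=())` is one way to get the same effect: dropout is off unless the training loop explicitly feeds 0.5.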


Hey Aleksei, you’re absolutely right, sorry – I fixed this on GitHub, then forgot to thank you here – so thanks for the heads-up!


Hi Mike,

Thanks so much for posting this! I’m just starting to dip my toes into seq2seq models, and this example is great :) Btw, it looks like you import defaultdict but never use it.
